Svitlana Vakulenko, presented a paper that she wrote together with Michael Coches, Maarten de Riijke, Axel Polleres and Vadim Savenkov at the International Semantic Web Conference 2018 held in October 2018 in Monterey, California. Here is an excerpt from Svitlana’s blog:

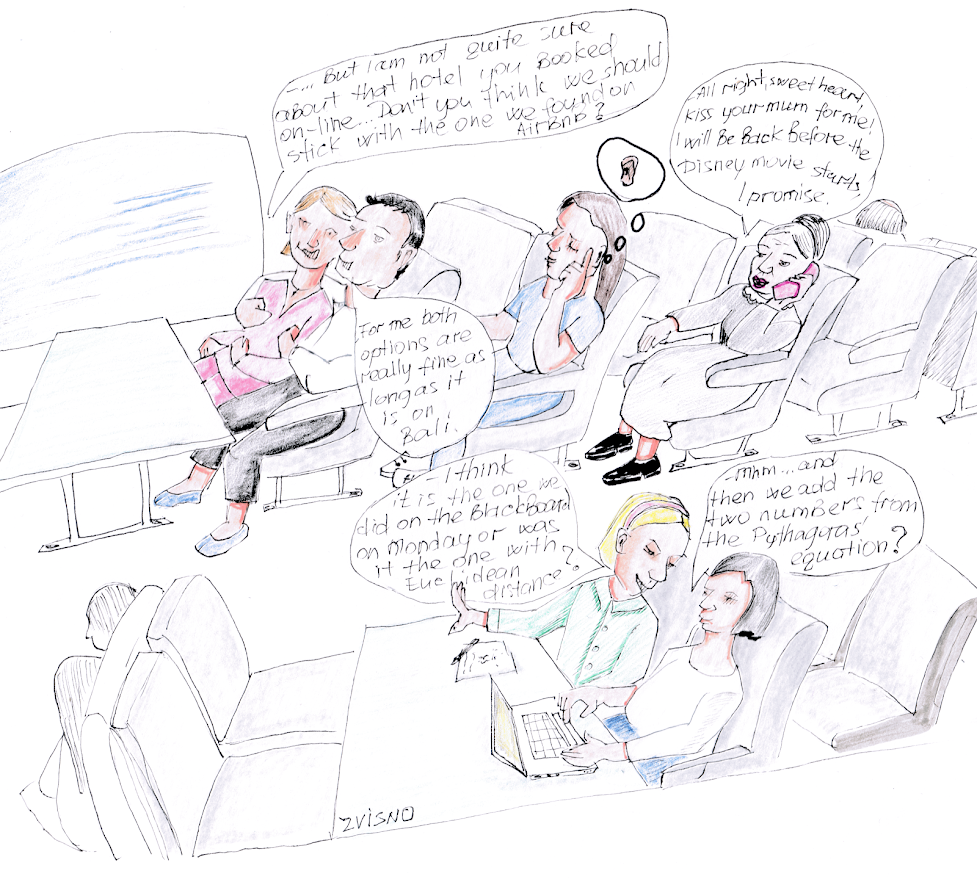

Imagine: you sit in a train, in which many different conversations are going on at the same time. A couple next to the window is planning their honey-moon trip; the girls at the table are discussing their homework; a granny is in a call with her great-grandson. You close your eyes and try to recover who is talking to whom by paying attention to the content of the conversations and not to the origin of the sound waves:

— Mhm .. and then we add the two numbers from the Pythagoras’ equation?

— … but I am not quite sure about that hotel you booked on-line… Don’t you think we should stick with the one we found on Airbnb?

— All right, sweetheart, kiss your mum for me! I will be back before the Disney movie starts, I promise.

— I think it is the one we did on the blackboard on Monday or was it the one with Euclidean distance?

— For me both options are really fine as long as it is on Bali.

It is relatively easy to tell the three conversations apart. We hypothesize that this is due to certain semantic relations between utterances from the same dialogue that make it meaningful, or coherent, which brought us to the following set of questions:

- What are the relations between the words in a dialogue (or rather the concepts they represent) that make a dialogue semantically coherent, i.e. making sense? and

- Can we use available knowledge resources (e.g. a knowledge graph) to tell whether a dialogue makes sense?

The later is particularly important for dialogue systems that need to correctly interpret the dialogue context and produce meaningful responses.

To study these two questions we cast the semantic coherence measurement task as a classification problem. The objective is to learn to distinguish real (mostly coherent) dialogues from artificially generated dialogues, which were made incoherent by design. Intuitively, the classifier is trained to assign a higher score to the coherent dialogues and a lower score to the incoherent (corrupted) dialogues, so that the output score reflects the degree of coherence in the dialogue.

We extended the Ubuntu Dialogue Corpus, which is a large dialogue dataset containing almost 2M dialogues extracted from IRC (public chat) logs, with generated negative samples to provide an evaluation benchmark for the coherence measurement task. We came up with 5 different ways to generate negative samples, i.e. incoherent dialogues by a) sampling the vocabulary (1. uniformly at random; 2. according to the corpus-specific distribution) and b) permutations of the original dialogues (3. shuffling the sequence of entities, or combining two different dialogues via 4. horizontal and 5. vertical splits).

We also implement and evaluate three different approaches on this benchmark.

Two of them are based on a neural network classifier (Convolutional Neural Network) using word or, alternatively, Knowledge Graph embeddings; and the third approach is using the original Knowledge Graph (Wikidata+DBpedia converted to HDT) to induce a semantic subgraph representation for each of the dialogues.

Read the full story in Svitlana’s blog!