Objectives

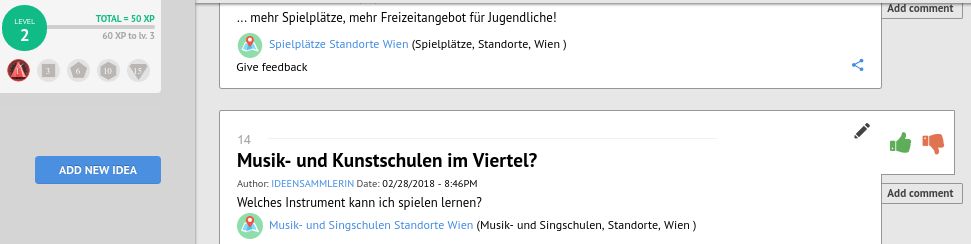

The ultimate goal of the project is to make Open Data more accessible for non-expert users. To this end, we improve the open dataset search (see the current prototype) to make it aware of geographic and temporal properties found not only in the metadata but also in the data itself. We use the search to connect online participation tools, in particular discuto.io, with Open Data: embedding dataset recommendations and search in discussions facilitates the discovery of open datasets and supports embeddable data citations.

We also prototype an infrastructure for streamlined publishing and collaborative management of open datasets. Our solution is based on GitLab, but it will enable easier workflows for single-file modifications and will focus specifically on tabular (CSV) data.

Online discussions and datasets are placed in a larger context by the webLyzard platform, which integrates, analyzes and visualizes media sources at Web scale.

Please sign up for the project newsletter to get early access to the developed tools and receive news about the project.

The CommuniData pilot runs under the name Expedition Stuwerviertel Living Lab in a part of Vienna’s Second District, led by the Urban Renewal Office GB* Office for Districts 1, 2, 7, 8, 9, 20. Our methodology for studying the role of Open Data in raising eParticipation is partly based on Bewextra, the framework for need knowledge developed in the Knowledge Management Group of WU Vienna.

Project Structure

The project is led by Vienna University of Economics and Business (WU), jointly by the Institute of Production Management and the Institute of Information Business. Community-based innovation Systems GmbH (cbased) is the technology provider of the online discussion platform discuto.io, webLyzard technology gmbh addresses the visualization and Web Intelligence aspects, and DI Andrea Mann, representing the Vienna Urban Renewal Office GB* Office for Districts 1, 2, 7, 8, 9, 20, is the pilot lead.